Hello 👋,

I started exploring Rust a week ago due to more and more web services being written in Rust at my work place and so far I like the language, it’s simple, fast and beautiful.

My day to day programming tasks are in Python, Go and Java. I’ve also used C# for some personal projects. Picking up Rust is not that easy for me since everything I write is web services or Java programs that don’t have “low-level” logic.

In this article I will talk about my journey with Rust and my experience of using std::simd. I find things that I don’t have much experience with fascinating. I also tried using SIMD with Go but that’s above my skill level, I could not get it to work.

What is SIMD?

The SIMD stands for Single Instruction/Multiple Data. Given a vector of 128 elements.

[1,2,3, …, 128]

When you’re adding the elements, you will loop through them. A typical code will lock like this:

| |

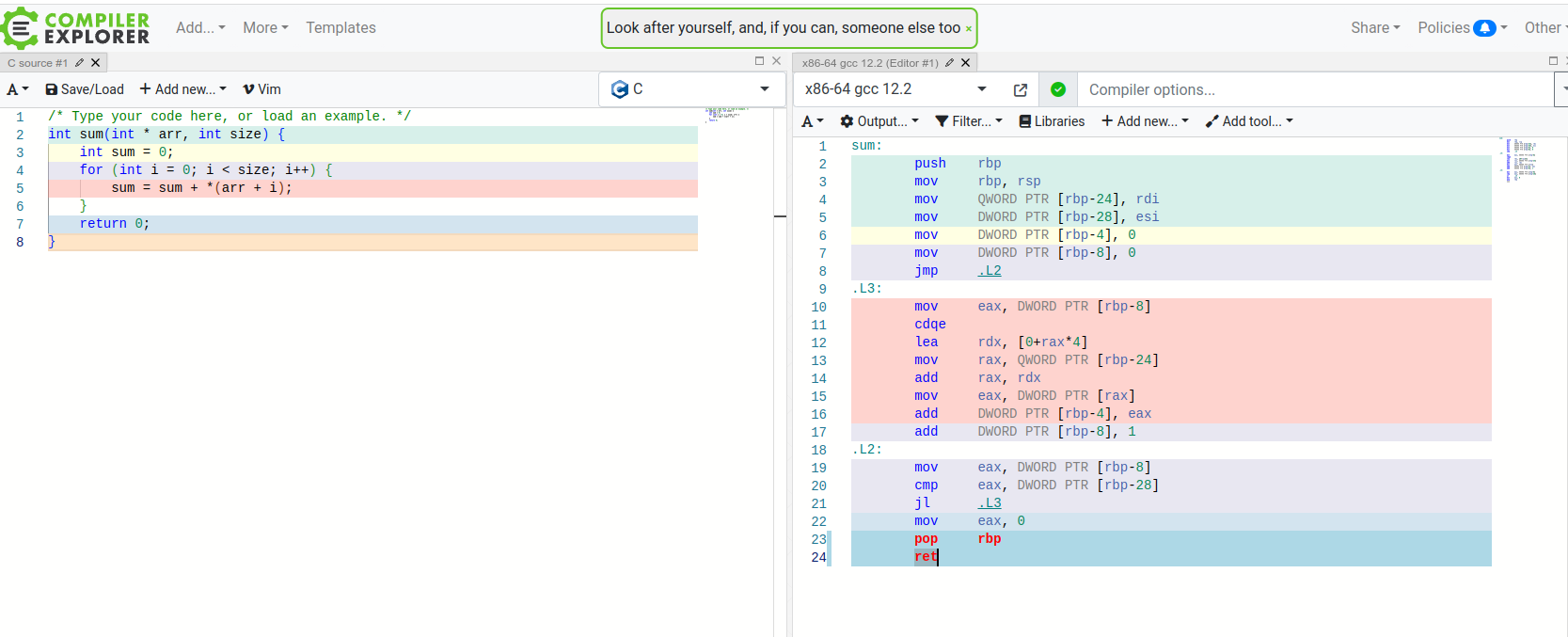

The generated assembly will look something like this:

The code in RED is a the loop part, as you can see we have lots of instructions. Sometimes when the size is known, the compiler may choose to do loop unrolling, this means that instead of using JMP instructions and looping the compiler will do something like this, if the size is 3 for example:

The code in RED is a the loop part, as you can see we have lots of instructions. Sometimes when the size is known, the compiler may choose to do loop unrolling, this means that instead of using JMP instructions and looping the compiler will do something like this, if the size is 3 for example:

| |

With SIMD instructions your CPU has support for assembly instructions that can work with vectors in an efficient manner. You may read more about SIMD and how it works in this excellent article Crunching Numbers with AVX and AVX2.

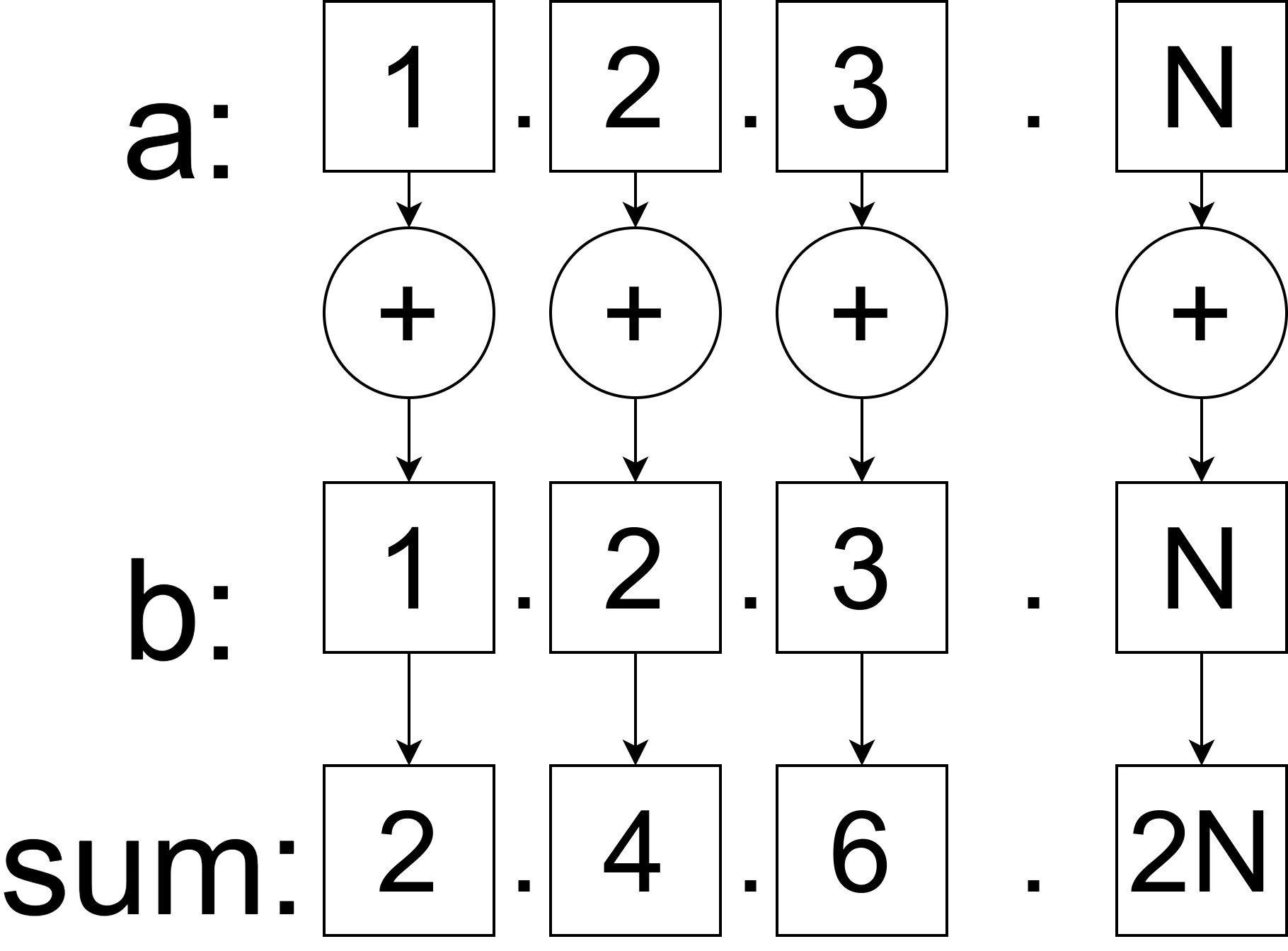

Basically given two vectors a and b you’re telling the compiler to do a mathematical operation on them and it will be efficient. Imagine that instead of looping though each element and executing the + operation; all elements are added at once:

Now that we have a basic understanding of how SIMD works we can move to Rust.

Now that we have a basic understanding of how SIMD works we can move to Rust.

Rust Program to compute hamming distance

Note:

if you want to follow along or run the code from this article you will need to install rust nightly version and set it as default:

| |

The problem we want to solve with SIMD instructions is to compute hamming distance on two vectors equal in size. The hamming distance between [1, 2, 3] and [1, 3, 3] is equal to 1 because 2 != 3, the rest of the elements are equal.

The non-SIMD Rust function looks like this:

| |

To preview the assembly a colleague recommended the cargo-show-asm crate. You can install it and run the following command to show the assembly code for the given function

| |

The generated assembly looks like a simple loop:

| |

Next, I’ll show my implementation that uses simd instructions:

| |

The assembly code for this function looks like this:

| |

We can see that XMM registers are being used.

Benchmarking the code

To benchmark the code I’ve used a block of code that I’ve copied from a Rust SIMD page[1] and modified slightly.

| |

The results are the following. The code written to use SIMD wins:

| |

Inlining the functions

If we remove #[inline(never)] then something interesting happens. The compiler sees that both functions are used only once and it decides to inline them.

If we run cargo asm –bin rust_simd the functions do not appear in the output:

| |

Benchmarking the code again yields the following result:

| |

The vanilla block (classic hamming distance) function is much faster, and if we look at the assembly code we can see that the Rust compiler is smart and it auto vectorized the code to use SIMD instructions, unlike me the compiler is smarter and it’s code is better:

| |

The assembly for the hamming_distance_simd function looks like this:

| |

We can also force a loop unrolling if we hard-code the chunks size in hamming_distance_simd:

| |

Then the assembly will become:

| |

You can see that by unrolling the loop there are exactly 8 code blocks that use SIMD instructions. The performance is slightly better but not by much:

| |

Conclusions

I’m still a beginner rustacean and my journey is just getting started. I’ve tried to outsmart the Rust compiler by using SIMD instructions manually but that didn’t work that well. Rust figured out how to optimize and vectorize the loop code that wasn’t written with SIMD instructions in mind and in the end it gave much better performance.

If you have suggestions on how to improve the code please let me know in the comments. 😀

Thanks for reading! Happy hacking!

Code

https://gist.github.com/dnutiu/8fc529d09d2872595cf2bf23d40e1cd2