Hello 👋,

In this short article I want to talk about parallel processing in Python.

Introduction

Sometimes you will need to process certain things in parallel. If you’re using Python you may know about the global interpreter lock abbreviated GIL for short. The GIL is a lock that allows only a single thread to control the Python Interpreter at a time (per process), that means that your multi-threaded Python program will have only a single thread executing code at the same time.

To overcome the GIL problem, I highly recommend the concurrent.futures module along with the ProcessPoolExecutor which is available since Python 3.2.

The ProcessPoolExecutor comes with some limitations, you can only execute and return objects that can be pickled. The pickle module is used for serializing and deserializing Python objects.

Computing Sums

To demonstrate the use of the ProcessPoolExecutor I wrote a simple program for counting the sum of an array with 1_000_000_000 elemets. On my machine the program executes in 20713ms.

| |

To speed up the program we can execute the code in parallel in multiple processes, instead of computing the sum in a single step we can split it in 100 steps and use the ProcessPoolExecutor to execute the compute sum for each step.

By running the following code:

| |

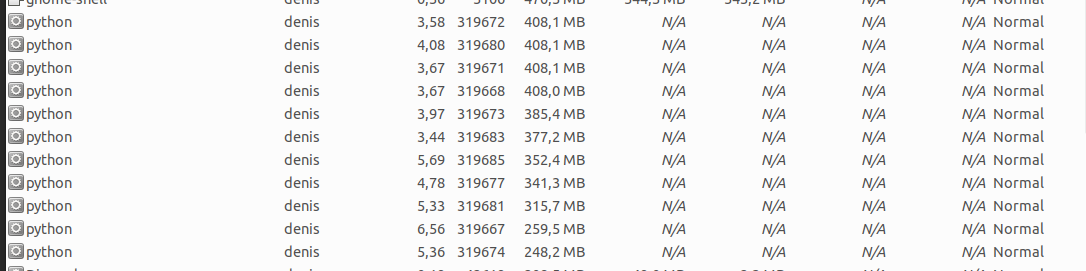

It will start 20 Python processes and each of them will get a task for computing the sum between a start range and stop range:

| |

Compared to the previous code, the one that uses the process pool executor runs only in ~7 seconds. That’s 3x time improvement!

Compared to the previous code, the one that uses the process pool executor runs only in ~7 seconds. That’s 3x time improvement!

Conclusion

Running the program in parallel improved the running time by almost 3X on my machine.

Dividing a problem into sub-problems and solving each problem in a parallel manner is a good way to improve the performance of your programs.

In Python if we run the same code on multiple threads only one thread executes at a time, which defeats the purpose of running on multiple threads. To overcome this limitation we used the ProcessPoolExecutor from the concurrent.futures module to run the code in multiple Python processes and finally we combined the results.

Thanks for reading!